Here are examples of virtual reality artworks and of medical and educational virtual and augmented reality apps made by the team. As part of the Know Thyself project, the team are currently developing two new virtual reality projects, along with new open source tools that allow other students, creatives and healthcare practitioners to easily work with medical scan data in virtual reality.

My Data Body

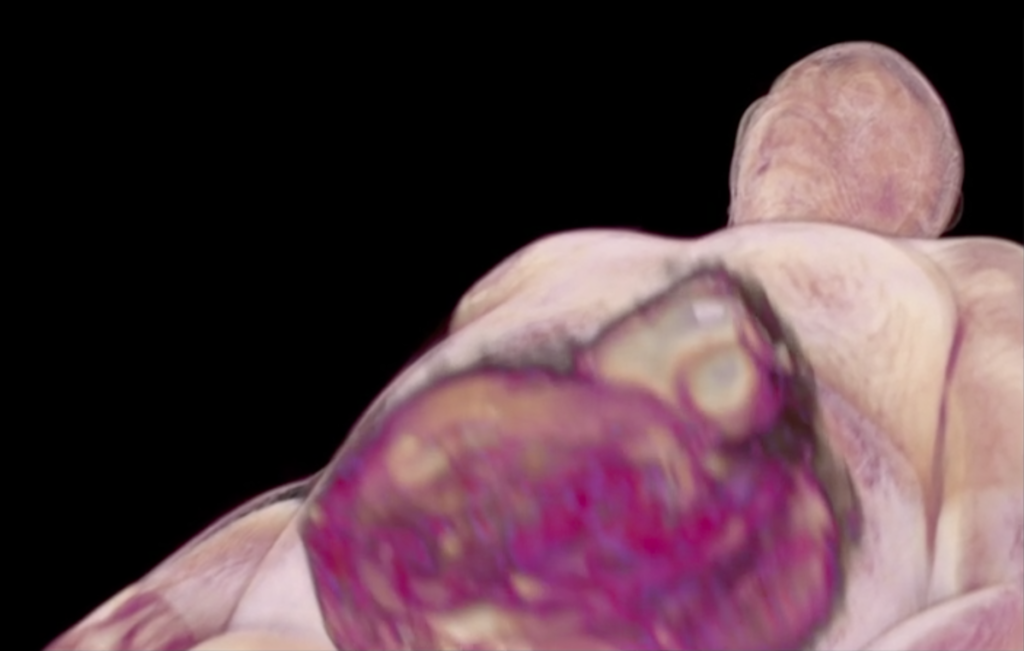

My Data Body is a collaborative, multimedia installation that includes sculptures, video projection, prints and virtual reality. My Data Body brings together different forms of personal data, such as medical scans, social media, biometric, banking and health data, in an attempt to make visible and manipulable our many intersecting data corpuses so that in VR they can be held, inspected and dissected. In My Data Body, the medically scanned, passive, obedient, semi-transparent body becomes a data processing site that can be pulled apart, de- and re-composed or, as Yuval Harari warns, ‘surveilled under the skin’. My Data Body is an interdisciplinary and collaborative project made as part of the research project Know Thyself as a Virtual Reality (KTVR). Collaborators include Marilène Oliver (visual art), Scott Smallwood (sound), J.R. Carpenter (poetry) and Stephan Moore (sound). My Data Body was made with the assistance of Daniel Evans, Kirtan Shah, Walter Ostrander, Chelsey Campbell, Alissa Rossi and Jiayi Ye, and has been greatly informed by the expertise of the whole KTVR research team.

Your Data Body (Work in Progress)

Your Data Body is a partner project to My Data Body, made using a combination of open source and donated datasets. This project focuses on issues of data privacy and ownership, playing on the etymology of the word data, meaning ‘given’. The user can pick up and move datasets around. The scanned body parts can be resized and re-colored, inviting a playful stacking of the body parts to make a whole Frankenstein-like figure. Attached to each body part is an audio file, which is triggered when the user holds and manipulates it. Anonymized open source datasets are accompanied by an automated voice that reads the study data published alongside the dataset, whereas the donated datasets will have different conversational AI characters/chatbots with which the user can ‘discuss’ different issues relating to data ownership, privacy and virtuality.

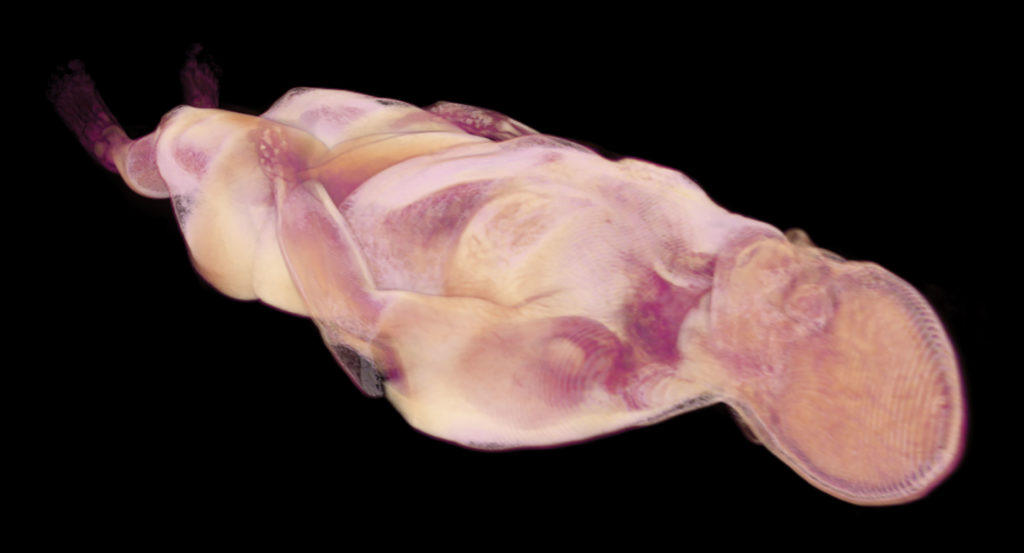

Deep Connection 2019

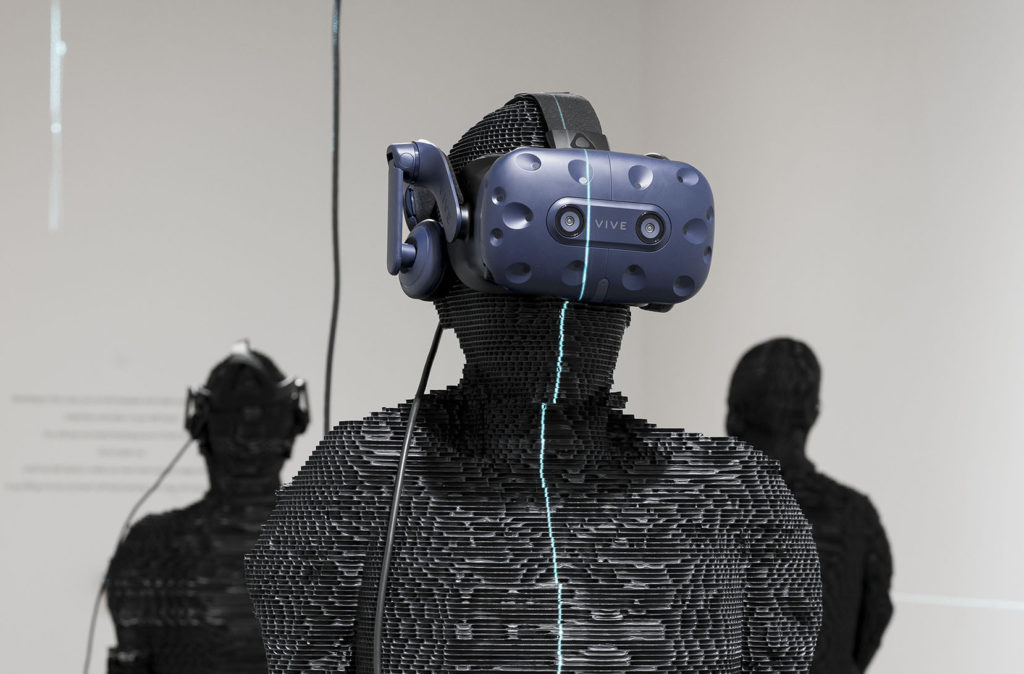

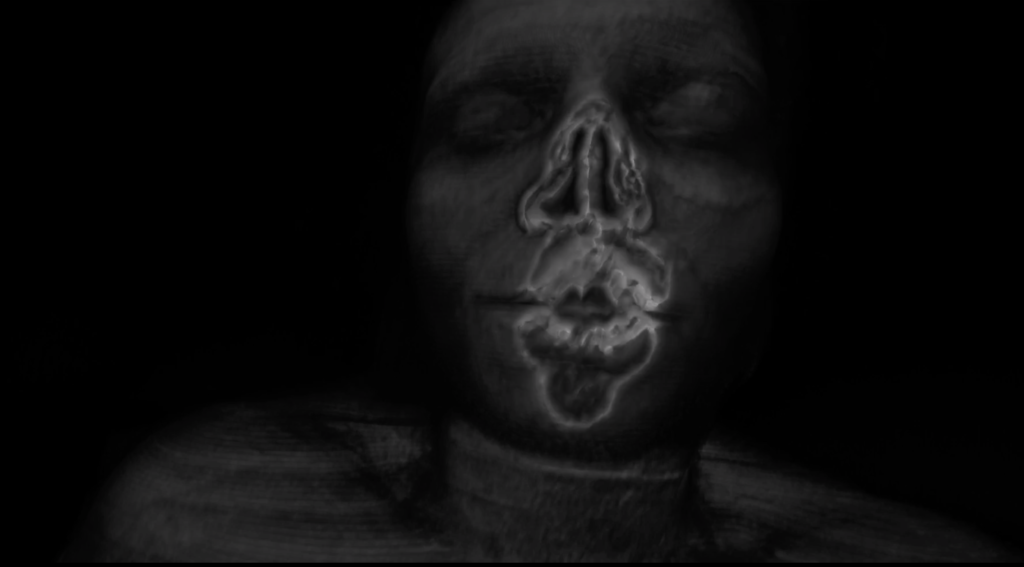

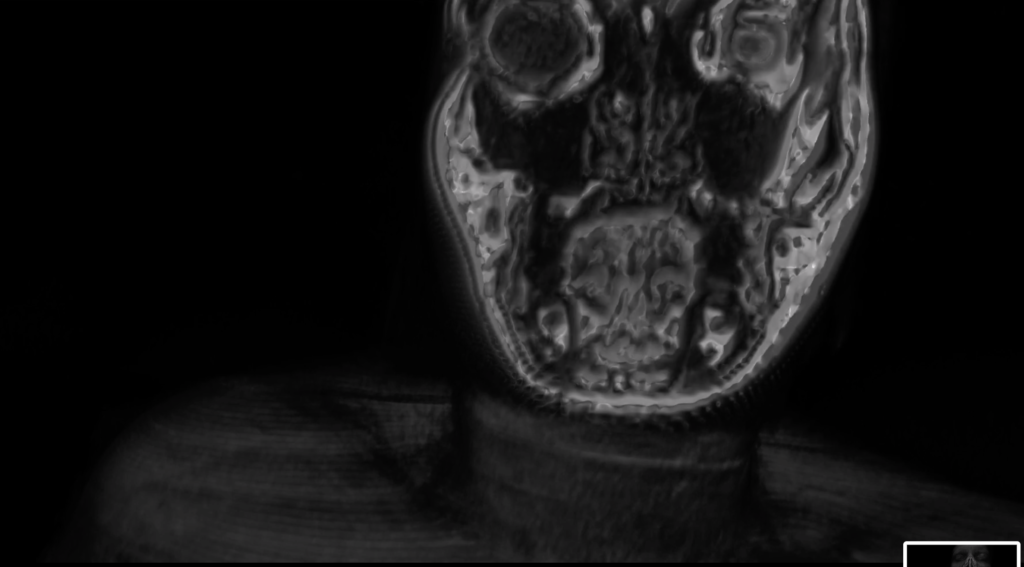

Deep Connection is an installation and VR artwork made using a full body MRI scan dataset. When the viewer enters Deep Connection, they see a scanned body lying prone in mid-air. The user can walk around the body and inspect it, lie underneath it and walk through it. The user can dive inside and see its inner workings: its lungs, spine and brain. The user can take hold of the figure’s outstretched hand: holding the hand triggers a 4D dataset, making the heart beat and the lungs breathe. When the user lets go of the hand, the heart stops beating and the lungs stop breathing.

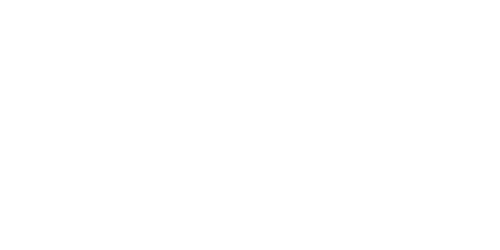

Space Invaders 2019

Space Invaders is a sculpture and VR installation that creates the uncanny experience of having our personal physical space invaded by virtual bodies. When the viewer enters the VR space, they find they are closely surrounded by three MR-scanned figures, which it is possible to move through and into. The physical body of the viewer becomes the interface with the virtual body.

Je Te Tiens, Tu Me Tiens (work in progress)

A virtual reality artwork that uses high resolution MR scan data to create an immersive experience that allows the user to enter the space of two scanned brains and explore them. Inside one brain space will be a ‘memory bank’, which will follow the logic of functional neuroanatomy. The aim is that the user will be able to walk inside a dataset of a brain and activate different memories with their hand. The ‘memories’ will be visible as text paths embedded into axial cross sections through the data that will brighten and become legible when the user touches them with their virtual hand. For example, when the user enters the occipital lobe, they will see, read and hear memories related to vision. In the other brain space, a white matter tractography map will be the foundation for flow paths inside the brain. Along these paths text will flow. The user will be able to block, disrupt or catch the text.

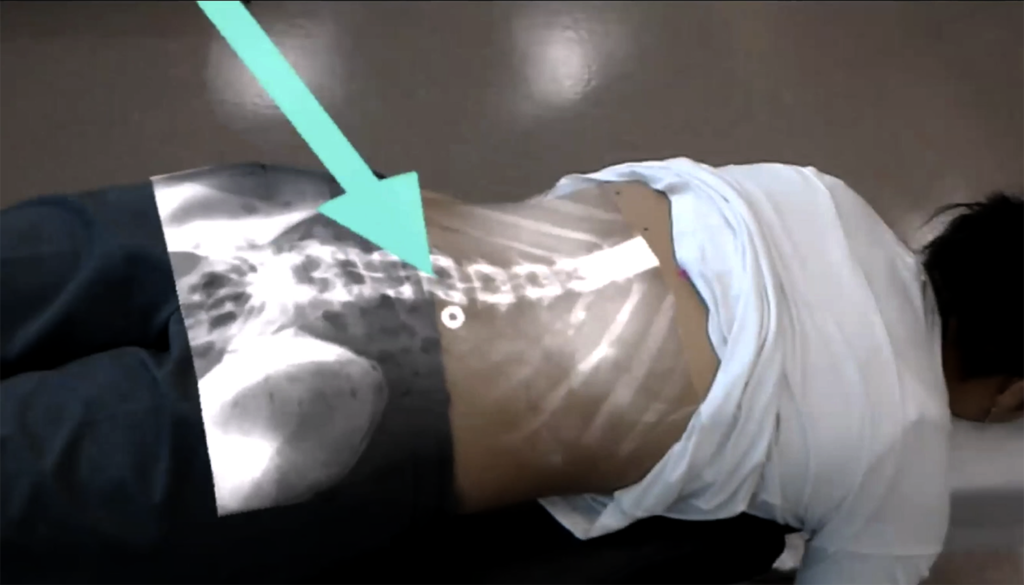

X-RAY VISION 2019

Since the discovery of ionizing radiation, clinicians have evaluated X-ray images separately from the patient. The objective of this study by Aaskov, Kawchuk et al. was to investigate the accuracy and repeatability of a new technology that seeks to resolve this historic limitation by projecting anatomically correct X-ray images onto a person’s skin using a HoloLens mixed reality system.

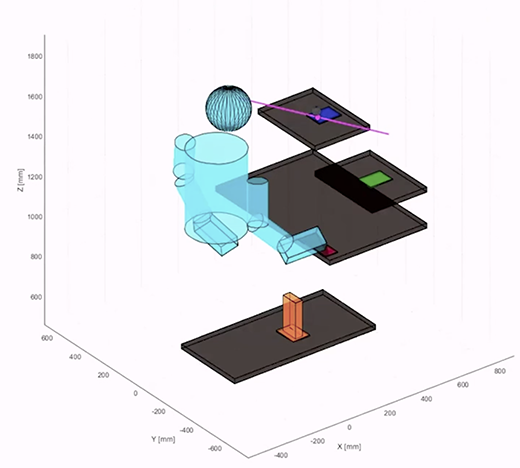

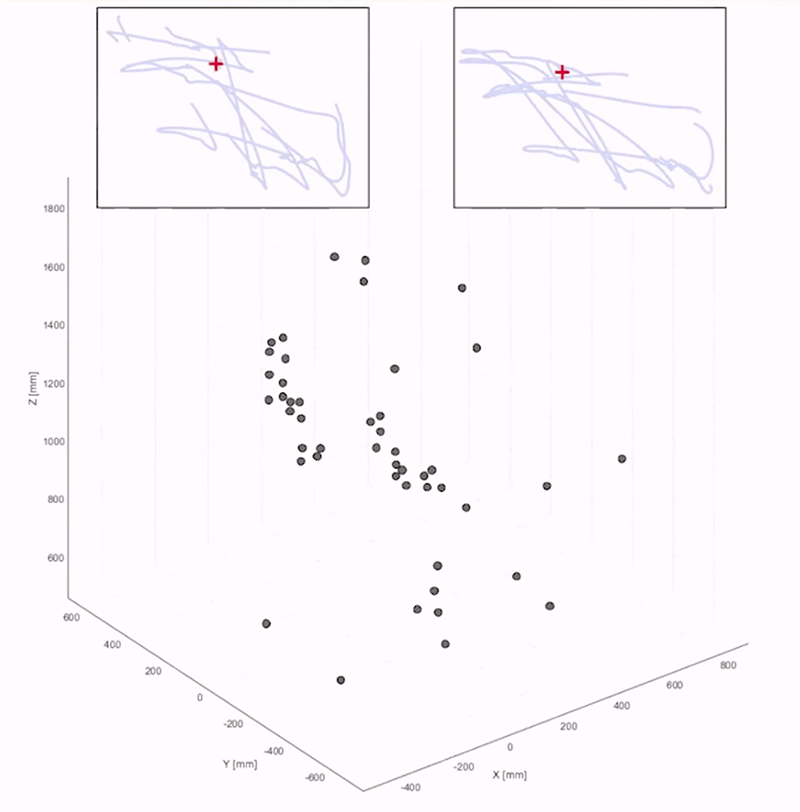

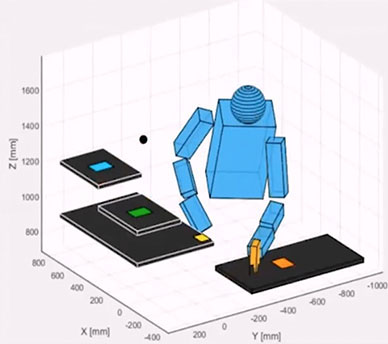

GaMA (Gaze and Movement Assessment)

Successful hand-object interactions require precise hand-eye coordination with continual movement adjustments. The Gaze and Movement Assessment (GaMA) was developed to provide protocols for simultaneous motion capture and eye tracking during the administration of two functional tasks, along with data analysis methods to generate standard measures of visuomotor behaviour. Chapman’s team is currently developing GaMA to capture hand-eye coordination data whilst in VR.

CogPro VR Projects

CogPro develops practical and novel tools that are complex yet captivating and easy to use. Many of the applications are AI-powered, meaning that tools like natural language processing enrich the interactions between the user and the virtual environment. Team members Nathanial Maeda and Dr Martin Fergusson-Pell are leaders of the CogPro team, and below is a selection of their projects.

CORONA Pod 2020

Corona Pod is a virtual reality artwork created using Tilt Brush by Marilène Oliver. In VR, the pod is large enough for an adult human to stand in. Oliver created this pod during the first COVID lockdown as a place to escape, and also as a place to dare to imagine the experience of her mother being alive at this time.

Archipelago by Daniel Evans

Archipelago by Daniel Evans is an explorable virtual environment created algorithmically from the contents of the artist’s Google user account. Location tracking data, activity patterns, and email are all used to generate the landscape, weather patterns, and procedural soundscape.

Huldra by Daniel Evans

Huldra by Daniel Evans is a VR experience that juxtaposes themes of resource extraction and data mining. Users connect a personal photo archive, such as Google Photos, which is analyzed for monetizable information. The work makes predictions about the user’s behaviour patterns and personal life based on the contents of the images it analyzes.